HoloHands: 3D Navigation and Computer Control with Gestures.

INSPIRATION AND DEVLOG

The scene in Iron Man where Tony Stark effortlessly manipulates holographic displays with just his hands sparked a fire within me. It wasn't just the futuristic tech wizardry that captivated me, but the sheer intuitiveness of it all. No clunky controllers, no awkward interfaces, just the natural language of gesture dictating the digital world. That's when the seed of an idea was sown: to create software that would bridge the gap between the physical and digital, using the webcam as a portal to a world of 3D interaction. VR applications for this may already exist, but I believe desktop software that only needs a webcam can reach a much wider audience.

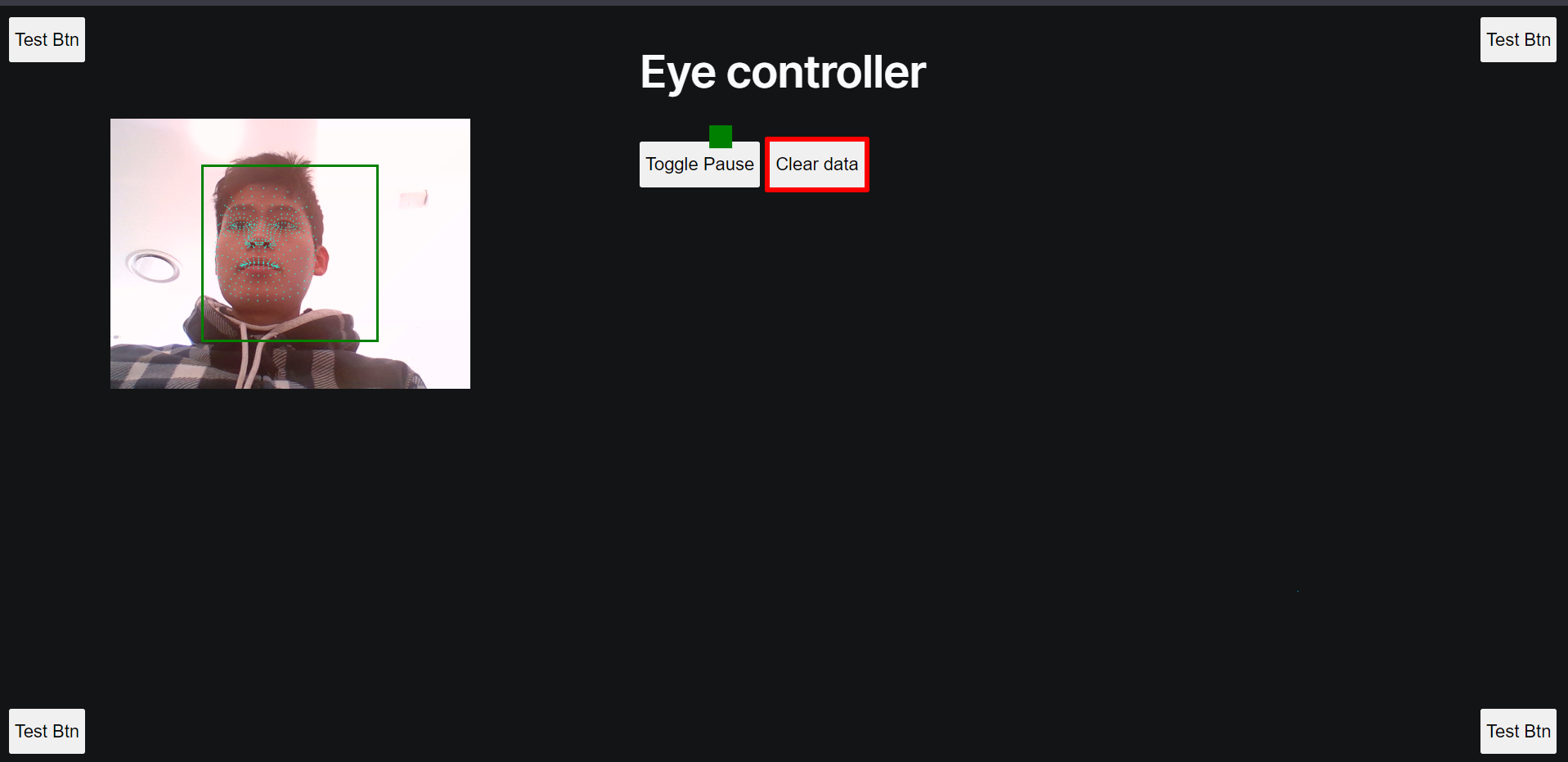

WebGazer was my initial approach. Yet reality proved stubbornly uncooperative. Fluctuations plagued every glance. Even heavy training on data from a controlled environment couldn't tame the imprecision enough to pick the correct button effortlessly, turning what should have been smooth interactions into a digital rollercoaster. It forced me to recalibrate, to explore other avenues, to refine my approach. The path to intuitive hand-gesture interaction might not lie with WebGazer, but the detour it provided has only made the destination clearer.

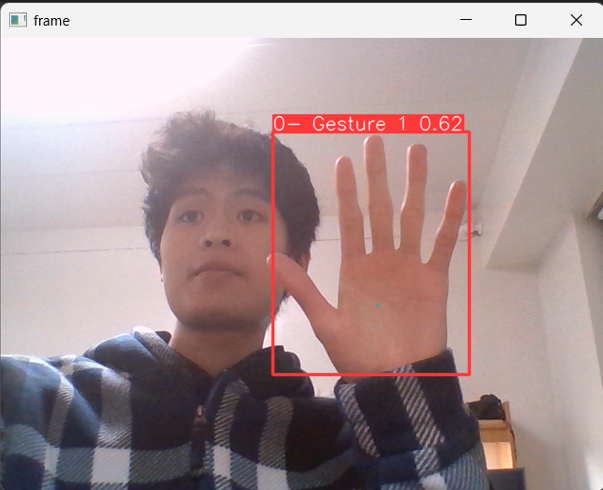

Trained on a Roboflow gesture dataset combined with my own self-annotated data, YOLOv8 proved a solid choice for gesture detection, yielding an impressive 87% mAP50 score. Bounding boxes for my gestures? Nailed it. However, this approach stopped short of individual finger coordinates, which are crucial for the nuanced 3D control I envisioned. This wasn't a showstopper, but a detour. Time to refine my strategy and dig deeper into hand pose estimation.

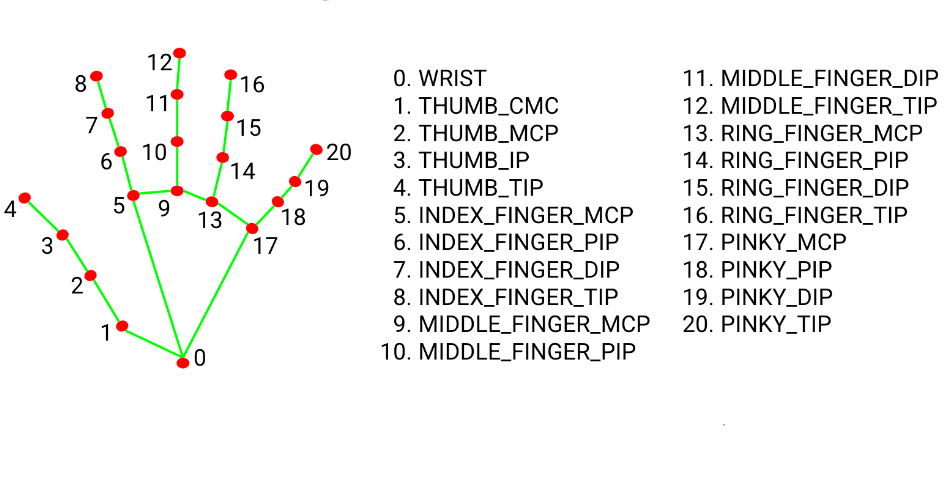

Then came MediaPipe, an open-source framework by Google that offers a robust solution for extracting hand pose and finger coordinates. What's left for me is to implement custom gesture recognition and logic. For example, in MediaPipe's image coordinates, where y increases downward, an upright hand's pinky is closed when the y value of landmark [20] (the pinky tip) is larger than that of landmark [17] (the pinky base). Combining several such booleans is enough to recognize a large number of the complex gestures a user may be trying to convey.
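To make the boolean idea concrete, here is a minimal sketch rather than the actual HoloHands code. It assumes MediaPipe's default image coordinates (y grows downward) and an upright hand, so a finger counts as closed when its tip sits lower in the image than its base; flip the comparison if your y-axis points up. The `Landmark` type, the `closed_fingers` and `classify` helpers, and the gesture names in `GESTURES` are all hypothetical and only there for illustration.

```python
from typing import Dict, NamedTuple, Sequence


class Landmark(NamedTuple):
    """Stand-in for one hand landmark; only the attribute names x, y, z matter."""
    x: float
    y: float
    z: float = 0.0


# (tip, base) indices of the four non-thumb fingers in the 21-point hand model.
FINGERS = {
    "index": (8, 5),
    "middle": (12, 9),
    "ring": (16, 13),
    "pinky": (20, 17),
}

# Hypothetical gesture table keyed by (index, middle, ring, pinky) closed flags.
GESTURES = {
    (True, True, True, True): "fist",
    (False, False, False, False): "open_palm",
    (False, True, True, True): "point",
    (False, False, True, True): "peace",
}


def closed_fingers(landmarks: Sequence[Landmark]) -> Dict[str, bool]:
    """Return one boolean per finger: True when the tip is below its base."""
    return {
        name: landmarks[tip].y > landmarks[base].y
        for name, (tip, base) in FINGERS.items()
    }


def classify(landmarks: Sequence[Landmark]) -> str:
    """Look up the combination of finger booleans in the gesture table."""
    state = closed_fingers(landmarks)
    key = tuple(state[name] for name in ("index", "middle", "ring", "pinky"))
    return GESTURES.get(key, "unknown")
```

With MediaPipe's Python hands solution, `landmarks` would typically be something like `results.multi_hand_landmarks[0].landmark`, whose items expose the same `x`, `y`, `z` attributes. The thumb is left out here because it folds sideways and usually needs an x-axis comparison instead.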

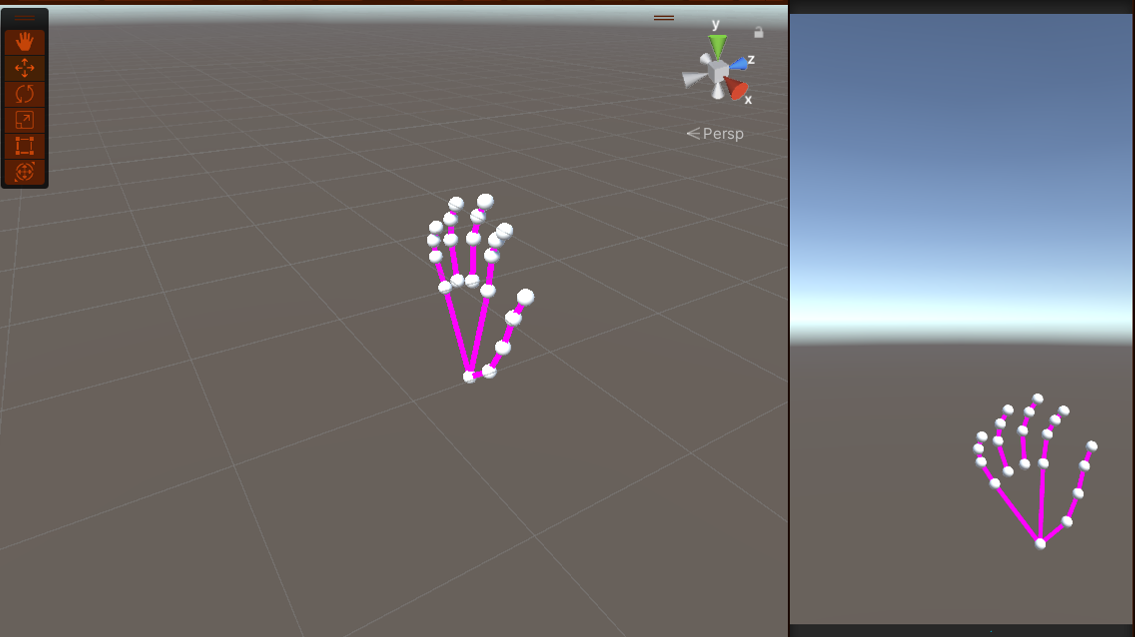

Besides controlling the computer and navigating 3D models, I have been experimenting with importing MediaPipe's data into a Unity environment, sending and receiving it over UDP.
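The Python side of such a UDP link could look roughly like the sketch below. The host, the port number, and the JSON-encoded flat list of coordinates are assumptions made for illustration, not the actual HoloHands wire format; the Unity side would need a matching UDP listener that parses the same layout.

```python
import json
import socket
from typing import Iterable, Tuple

UNITY_HOST = "127.0.0.1"  # assumption: Unity runs on the same machine
UNITY_PORT = 5052         # hypothetical port; must match the Unity listener

Point = Tuple[float, float, float]


def encode_landmarks(landmarks: Iterable[Point]) -> bytes:
    """Flatten (x, y, z) triples into one JSON array and encode it as UTF-8."""
    flat = [round(value, 4) for point in landmarks for value in point]
    return json.dumps(flat).encode("utf-8")


def send_landmarks(sock: socket.socket, landmarks: Iterable[Point],
                   address: Tuple[str, int] = (UNITY_HOST, UNITY_PORT)) -> int:
    """Send one frame of landmarks as a single datagram; returns bytes sent."""
    return sock.sendto(encode_landmarks(landmarks), address)


if __name__ == "__main__":
    # Send a single dummy frame of 21 landmarks.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sender:
        send_landmarks(sender, [(0.5, 0.5, 0.0)] * 21)
```

UDP suits this kind of stream because a dropped frame is simply replaced by the next one, so there is no need to wait for retransmission. A frame of 21 landmarks encoded this way stays well under a typical datagram size limit.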

MediaPipe only provides depth information relative to point [0] (the wrist). To estimate the depth of point [0] itself, I take the distance from [5] to [17], which grows as the hand moves closer to the camera, multiply it by a scale factor, and shift the whole hand along the z-axis accordingly.
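A rough sketch of this depth trick is shown below. It assumes landmarks arrive as `(x, y, z)` tuples, measures the [5] to [17] distance in the image plane only, and uses a made-up `DEPTH_SCALE`; the real factor, and its sign, would have to be tuned by hand for the camera and the Unity scene. The function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]

DEPTH_SCALE = 1.0  # hypothetical factor; tune magnitude and sign for your scene


def palm_width(landmarks: Sequence[Point]) -> float:
    """Image-plane distance between landmark 5 (index base) and 17 (pinky base)."""
    x5, y5, _ = landmarks[5]
    x17, y17, _ = landmarks[17]
    return math.hypot(x5 - x17, y5 - y17)


def wrist_depth(landmarks: Sequence[Point], scale: float = DEPTH_SCALE) -> float:
    """Estimate the depth of landmark 0 as palm width times a scale factor."""
    return scale * palm_width(landmarks)


def shift_hand(landmarks: Sequence[Point], scale: float = DEPTH_SCALE) -> List[Point]:
    """Add the estimated wrist depth to every wrist-relative z value."""
    z0 = wrist_depth(landmarks, scale)
    return [(x, y, z0 + z) for x, y, z in landmarks]
```

The mapping is linear in the palm width, as described above. Under a pinhole camera model the true distance is closer to inversely proportional to the apparent width, so swapping in `scale / palm_width(landmarks)` may be worth trying if the linear version feels off at the extremes.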

The software will be available to download as an .exe file from the official website. The UI is built with Python's Tkinter.